While our current pilot projects have been getting the Discovery tool into the hands of staff, we’ve been working behind the scenes on the student version. We’re pleased to say that it is testing well with users, and students are particularly keen on the detailed final report. We’ll be promoting this more positively to learners as the prize at the end of their journey. Meanwhile we’re making some final improvements to the content, thanks to all the feedback from users and experts.

All this means that we’re looking for existing pilot institutions that are keen to extend the experience to students. You can express an interest by completing this sign-up form, and you can read more about what’s involved below.

About the student Digital discovery tool

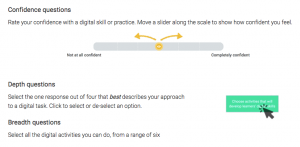

The student version is designed exactly like the staff version, as described in this blog post. Users answer questions of three types, then receive a detailed feedback report with suggested next steps and links to resources.

The content is designed to be:

- Practice based: users start with practical issues, and the language is designed to be accessible and familiar

- Self-reported: we trust users to report on their own digital practices. We attach very little weight to self-reported confidence, but we do expect learners to report accurately on what they do in specific situations (depth), and on which digital activities they undertake routinely (breadth).

- Nudges and tips: the questions are designed to get users thinking about new practices and ideas before they even get to their feedback report.

- Generic: different subject areas present very different opportunities to develop digital skills – and make very different demands. We aim to recognise practices that have been gained on course (after all these make an important contribution to students’ digital capability!) but where possible we reference co-curricular activities that all students could access.

Student users will find only one assessment on their dashboard, unlike many staff who will find a role-specialised assessment alongside the generic assessment ‘for all’. Most of the elements in the student assessment are the same as in the staff generic assessment, mapped to the digital capabilities framework. But the content is adapted to be more relevant to students, and the resources they access are designed to be student-facing, even where they deal with many of the same issues.

The ‘learning’ element of the framework is split across two areas to reflect its importance to students. These are ‘preparing to learn’ with digital tools (mainly issues around managing access, information, time and tasks), and ‘digital learning activities’. There is also an additional element, ‘digital skills for work’, that sits at the same level as ‘digital identity’ and ‘digital wellbeing’ in the framework, reflecting the importance of the future workplace in learners’ overall motivation to develop their digital skills.

The feedback encourages learners to think about which elements they want to develop further, based on their own course specialism and personal interests. Where they score low on issues such as digital identity that we know are critical, we prompt them to seek advice. So use of the discovery tool may lead to higher levels of uptake of other resources and opportunities – and we hope this is seen as a successful outcome!

There is some minor variation between the versions for HE and FE students, but we have done our best to keep this to a minimum. Our research and consultations don’t suggest that sector is an important factor in discriminating the digital skills students have or need. However, we do recognise that students vary a great deal in their familiarity with the digital systems used in colleges and universities. So we’ve designed this assessment to be suitable for students who are some way into their learning career, right up to those preparing for work.

It is not intended for arriving or pre-arrival students. We are considering a special assessment for students at this important transition, but there are some problems with developing this:

- These students vary much more in their experience of digital learning, so it is much harder to design content that is not too challenging (and off-putting) for some, while being too basic for others.

- We are concerned that organisations might see it as a substitute for preparing students effectively to study in digital settings – this is not a responsibility that can be delivered by a self-reflective tool.

- We have learned from students that the most important content of an induction or pre-induction ‘toolkit’ is institution-specific – depending on the specific systems and policies in place.

So at the moment our focus for arriving students is to work with Tracker users to design a digital induction ‘toolbag’. The ‘bag’ is simply a framework that colleges can use to determine for themselves – from their Tracker findings and other data – how they want arriving students to think about digital learning, and what ‘kit’ of skills, devices etc they will need. More of this over on the Tracker blog soon.

What the Digital discovery tool for students is not

As above, the Discovery tool is not an induction toolkit, or any kind of toolkit. It doesn’t deal with local systems and policies, which are critical to students becoming capable learners in your institution. It does prompt learners to think about a whole range of skills, including their general fluency and productivity with digital tools, which will support them to adopt new systems and practices while they are learning.

The Discovery tool offers access to high quality, freely-available resources, in a way that encourages learners to try them. In future you may be able to point students to your own local resources as well. But it isn’t a course of study and there’s no guarantee that learners will follow up the suggestions or resources offered.

The scoring system is designed to ensure students get relevant feedback, and to motivate them to persevere to the report at the end. It has no objective meaning and should not be used for assessment, either formally or informally. We have deliberately designed the questions to be informative, so it’s always clear what ‘more advanced’ practice looks like. Users who want to gain an artificially high score can do so easily, but we don’t find this happening – so long as they see the development report as the prize, rather than the score itself.

About the pilot process

Just like the staff pilot, we’re looking for quality feedback at this stage. If you’d like to be part of the journey, we’d be delighted to have your support. You’ll need to complete this sign-up form before the end of 23rd March – it’s a simple expression of interest – after which we’ll notify participants and send out your log-in codes. Our Guidance has been updated to ensure it is also relevant to the student pilot, and you’ll have dedicated email support. Access will be open to students until the end of May 2018.

Because this is a pilot, we are still improving the content and still learning how best to introduce it to students to have the most positive outcomes. This means changes are likely. It also means we’ll ask you and your students to give feedback on your experiences, as with the staff pilot.

Join us now: complete the sign-up form.