Universities, colleges and independent providers that have signed up to pilot the Digital discovery tool will receive their access codes today. On this page you can learn more about the new Discovery tool, the Potential.ly platform, the different assessments available, and the guidance that will help you put it all into practice.

Where we are today

The open pilot is taking place in 101 organisations (57 HE, 35 FE and 9 ‘other’) between now and the end of May 2018. You can find out more about the pilot organisations and their different approaches in this blog post.

The Digital discovery tool being piloted:

- is based on a new platform from Potential.ly

- offers completely new, user-tested questions and feedback for staff

- links to a host of new resources, all openly available

- offers further specialist questions and feedback for staff with a teaching role (in HE or in FE and Skills)

The new platform

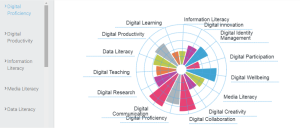

Potential.ly is working with Jisc on the development of the new platform for the Digital discovery tool. The Potential.ly team has experience of delivering an accurate personality indicator to help students understand their strengths and ‘stretch’ areas across twenty-three traits and to prepare for employment. Their platform offers a clear visual interface for the Digital discovery assessments and feedback report.

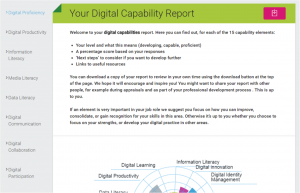

Digital capability resources are available through the dashboard in an attractive, accessible style. This screenshot shows the browse view. Answering the assessment questions creates a personalised report for each user, with recommended resources to follow up.

The new design

The Digital discovery tool is designed according to the following principles:

- Practice based: users start with practical issues as a way in to digital capability thinking

- Self-reported: we trust users to report on their own digital practices. The scoring-for-feedback system means it is pointless for users to over-rate themselves.

- Nudges and tips: the questions are designed to get users thinking about new practices and ideas, before they read a word of their feedback report.

- Broad relevance: we have tried to avoid referencing specific technologies or applications to make the content relevant across a wide range of roles and organisations. Sometimes we use familiar examples to illustrate what we mean by more general terms.

All users are offered an assessment called ‘digital capabilities for all’, based on the 15 elements (6 broad areas) of the Jisc Digital capability framework. There are very few differences in the questions for staff in different roles or sectors, and students answer many of the same questions too, though the feedback and resources they get are a bit different.

Some users are also offered a specialist assessment, depending on the role they choose when they sign in. At the moment we are offering an additional question set for teaching staff – ‘digital capabilities for teaching’ – as this was the priority group identified in our pre-pilot consultations. We will shortly offer another specialist set for learners, and one for staff who undertake or support research. More may follow, depending on demand. Users can choose to complete only the general or only the specialist assessment, but they must complete all the questions in an assessment before they get the relevant report.

The questions

There are three kinds of question.

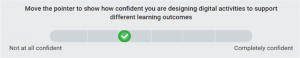

Confidence question: rate your confidence with a digital practice or skill, using a sliding scale. The opportunity for self-assessment prompts users to reflect and helps them to feel in control of the process.

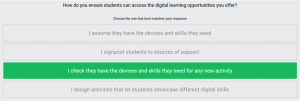

Depth question: select the one response out of four that best describes your approach to a digital task. This helps users identify their level of expertise and see how more expert practitioners behave in the same situation.

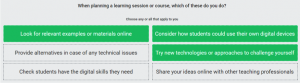

Breadth question: select the digital activities you (can) do, from a grid of six. We have tuned these so most users will be able to select at least one, but it will be difficult to select all six.

At the moment we know that some elements are harder to score highly on than others. Once we have a large data set to play with, we will be able to adjust for these differences. But it may just be the case that some areas of digital capability are more challenging than others…
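For readers who like to see things spelled out, the three question kinds can be pictured as a small data model. This is an illustrative sketch only, not a description of how the Potential.ly platform scores responses. The class names, the 0 to 10 range for the sliding scale, the assumption that the four depth responses run from least to most expert, and the simple averaging in `element_score` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical model of the three question kinds. The ranges and the
# normalisation to a value between 0 and 1 are assumptions for illustration.


@dataclass
class ConfidenceAnswer:
    value: int  # position on an assumed 0-10 sliding scale

    def normalised(self) -> float:
        if not 0 <= self.value <= 10:
            raise ValueError("confidence must be between 0 and 10")
        return self.value / 10


@dataclass
class DepthAnswer:
    choice: int  # index 0-3 of the one response chosen out of four

    def normalised(self) -> float:
        if not 0 <= self.choice <= 3:
            raise ValueError("depth choice must be between 0 and 3")
        return self.choice / 3


@dataclass
class BreadthAnswer:
    selected: frozenset  # indices 0-5 of the activities ticked in the grid of six

    def normalised(self) -> float:
        if not self.selected <= frozenset(range(6)):
            raise ValueError("breadth selections must be between 0 and 5")
        return len(self.selected) / 6


def element_score(answers) -> float:
    """Average the normalised answers for one element, as a percentage."""
    if not answers:
        raise ValueError("all questions must be answered before scoring")
    return round(100 * sum(a.normalised() for a in answers) / len(answers), 1)
```

Under these assumptions, a user who rates their confidence at 5, picks the most expert depth response and ticks three of the six breadth activities would score 66.7 for that element.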

The feedback

Once all the questions in an assessment have been completed, users receive a visualisation of their scores, and a feedback report. The report can be downloaded to read and reference in the user’s own time – alone or with a colleague, mentor or appraiser.

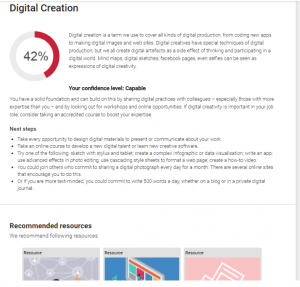

The feedback report includes, for each element assessed:

- Level: this is shown as one of ‘developing’, ‘capable’ or ‘proficient’. Some text explains what this means in each case.

- Score: this shows clearly how the user’s responses have produced the level grading

- Next steps: what people at this level could try next if they want to develop further

- Resources: links to selected resources for exploration
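The structure of a report entry can be sketched in a few lines of code. The three level names come from the list above; everything else here is an assumption made for illustration, including the 0 to 100 score range, the threshold values and the names `LEVEL_THRESHOLDS`, `level_for` and `ElementFeedback`. The actual grading rules used in the tool may differ.

```python
from dataclasses import dataclass, field

# Level names are taken from the post. The thresholds are invented for
# illustration and are not the tool's real grade boundaries.
LEVEL_THRESHOLDS = [
    (70.0, "proficient"),
    (40.0, "capable"),
    (0.0, "developing"),
]


def level_for(score: float) -> str:
    """Map an assumed 0-100 element score to one of the three levels."""
    if not 0 <= score <= 100:
        raise ValueError("score must be between 0 and 100")
    for minimum, level in LEVEL_THRESHOLDS:
        if score >= minimum:
            return level
    raise AssertionError("unreachable: thresholds cover the full range")


@dataclass
class ElementFeedback:
    """One entry in the feedback report, for a single element."""

    element: str
    score: float
    next_steps: list = field(default_factory=list)
    resources: list = field(default_factory=list)

    @property
    def level(self) -> str:
        return level_for(self.score)
```

Deriving the level from the score, rather than storing it separately, keeps the two from drifting apart, which fits the aim that the score should show clearly how the level was reached.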

The resources

All the resources available through the Discovery tool – whether they are recommended in the user’s personal report, or browsed from the dashboard – are freely available, quality assured, and tagged to different elements of the Jisc Digital capability framework.

Making it better

This is a pilot, which means we are still learning how the Digital discovery tool might be useful in practice, and making improvements to the content and interface. For example, there may be some changes to users’ visual experience over the coming weeks and months. We are gathering feedback in three ways:

- End-users are asked to fill in a short feedback form once they have completed one or more assessments.

- A smaller group of ‘pilot plus’ institutions are going through the process with additional interventions and monitoring from the Jisc Building digital capabilities team, to help us learn from them more intensively.

- All institutional leads are being asked to fill in an evaluation form and to run a focus group with staff to explore the impacts and benefits of the project.

These interventions help us to improve the Discovery tool and the support we provide for digital capabilities more generally.

What next?

In another post we will explore how to understand and use the data returned to organisational leads. We are also developing, for launch in March 2018:

- A version for students studying in HE institutions, and for students in FE and Skills

- A prototype for the Building digital capability website, to bring all our digital capability services and resources together

- Four institutional case study videos

- A senior leaders’ briefing paper

- A study into how HR departments are supporting the development of staff digital capabilities (see http://bit.ly/digcaphr for more details)

Key resources

- Guidance for institutional leads: a full page of guidance on planning, implementing and evaluating the Discovery tool at your institution, and on engaging users

- Guidance for users: quick guide to using the Digital discovery tool

- All things ‘discovery’ are now available under the ‘Digital discovery tool’ menu at the top of this post.

- If you have any queries, contact digitalcapability@jisc.ac.uk