We now have almost 100 organisations signed up to pilot the new Digital capability discovery tool. The new platform and content will be available to trial with staff from January to May 2018, and a student version will also be available in the trial period.

If you’re not part of the pilot, you can still follow the progress of the project from this blog and on the new dedicated Discovery tool pages (launching early December).

I’ve been digging through the undergrowth of the sign-up data from all 100 pilot sites, trying to get a clearer picture of who is leading the project and what their motives are. This is a brief report from my explorations.

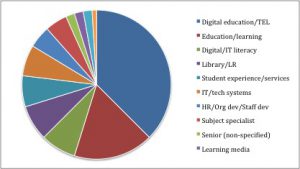

- Job roles

Lead contacts for the discovery tool have a wide and interesting range of job titles.

The largest group works in Digital education/e-learning/TEL (39), followed by Education/learning without a specific digital component (18). A separate cluster can be defined as specialists in Digital, IT or information literacy (8). These job titles included ‘Head of Digital Capability’, ‘Manager, information and digital literacy’ and ‘Digital Skills training officer’. Library/Learning Resources specialists accounted for another 8 sign-ups, Student experience/student services and IT/tech for 7 each, and HR/organisational/staff development for 5. There were also 5 subject specialist staff, of whom 3 were in English – a slightly surprising result.

These totals suggest that the bias of intended use is strongly towards teaching staff and learners.

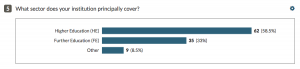

- Sector

Nearly 60% of sign-ups came from HE providers and 33% from FE. The ‘other’ responses (9) came from work-based, professional and adult learning, combined HE and FE institutions, and routes into HE.

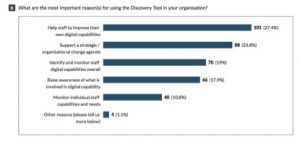

- Reasons for using the Discovery Tool

Respondents selected a mean of 3.5 different reasons, with almost all selecting ‘Help staff to improve their own digital capabilities’. Eighty-three percent were using the discovery tool to support a strategic change agenda, and there were also high scores for identifying and monitoring staff digital capabilities overall, and for raising awareness.

It’s interesting that 37% of respondents hope to use the discovery tool to ‘monitor individual staff’, a feature that is not offered currently. We included this option to assess whether our original aims – to produce a self-reflective tool – match with those of institutional leads. It is these tensions that our evaluation will have to explore in more detail (more under ‘next steps’ below).

- Current approaches to supporting staff

There were 104 responses to the question: ‘What approaches do you currently have in place to support staff with development of their digital capabilities?’

- 43 (41% of) respondents mentioned voluntary staff development, especially of academic or teaching staff.

- 14 (13% of) respondents mentioned some form of mandatory support for staff, i.e. at appraisal, PDR, or induction.

- 27 (26% of) respondents said provision for staff was currently insufficient, declining or ‘ad hoc’: ‘Institutional support for staff to develop digital capabilities was removed about 6 years ago’; ‘[Staff training] has been very stop, start in recent years due to changes in management’; ‘There is no overall institutional approach to digital capabilities.’

- 20 (19% of) respondents mentioned IT or similar training, and a further 13 (12%) mentioned TEL support.

- 20 (19% of) respondents mentioned online materials, of which 15 were using subscription services such as Lynda.com and 5 had developed their own.

- A sizeable number (14) had a specialist digital capability project under way, though often in the early stages.
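
For anyone who wants to check or reuse the figures above, here is a minimal sketch in Python of how the percentages follow from the counts. It assumes the denominator is the 104 responses to this question; the variable and function names are illustrative only and are not part of any Jisc tool.

```python
# Counts taken from the list above. The denominator (104 responses to
# this question) is an assumption about how the percentages were derived.
TOTAL_RESPONSES = 104

mention_counts = {
    "voluntary staff development": 43,
    "mandatory support": 14,
    "insufficient, declining or ad hoc provision": 27,
    "IT or similar training": 20,
    "TEL support": 13,
    "online materials": 20,
    "specialist digital capability project": 14,
}


def share(count, total=TOTAL_RESPONSES):
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


percentages = {label: share(n) for label, n in mention_counts.items()}

for label, pct in percentages.items():
    print(f"{label}: {pct}%")

# Total number of mentions across all categories.
total_mentions = sum(mention_counts.values())
print(f"total mentions: {total_mentions}")
```

The total number of mentions is larger than the number of responses, which indicates that some respondents described more than one approach, so the percentages are not expected to add up to 100.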

Nine respondents mentioned the Jisc Framework or (in two cases) another digital capability framework. Frameworks were being used: to support curriculum review; in teaching staff CPD; to design training (with open badges linked to the Jisc framework); and to identify gaps in provision. Two institutions mentioned the earlier pilot of the discovery tool as a source of support, and three were using alternative self-assessment tools.

- Current collection of data about staff digital capabilities

Asked ‘Do you already collect any data about staff digital capabilities?’, 58 respondents out of 101 (57.4%) said ‘no’ or ‘not at present’ or equivalent.

Among those who responded ‘yes’ (or a qualified ‘yes’) a variety of processes were used. These included:

- Anonymous surveys

- General feedback from staff training

- Feedback from appraisal or CPD processes

- Data from staff uptake of training or online learning

- Periodic TEL/T&L reviews (inst or dept level)

- Use of the discovery tool (n=10 – though in some cases this was prospective only)

- Use of the digital student tracker (n=1)

- Teaching observations

- Numbers involved and approaches to engaging staff

Organisations had very diverse ambitions for the pilot, from user testing with 6 staff to a major roll-out in the thousands. Strategies for engaging staff also varied widely. There were 104 responses to this question, and a lot of overlap with responses to the previous question about staff support in general.

Some 27 respondents decided that communication was key, and described the channels and platforms they would use. Strategies for gaining attention included having senior managers initiate messages, engaging students to design arresting visual messages, and involving a professional promotions team. Timing was sometimes carefully considered e.g. to coincide with another initiative, or to avoid peaks of workload. In addition, 9 respondents considered the content of communications and the majority of these planned to focus on the immediate benefits to end-users: the opportunity to reflect, develop confidence, find out more, get advice and feedback, identify existing strengths. Other incentives were digital badges (2), support for an HEA fellowship application, and chocolate!

In all, 46 out of 104 respondents (44%) proposed completion of the discovery tool in live, shared settings, either at existing events such as meetings or at specific events designed for that purpose. Although we have emphasised the personal nature of the discovery process, it may be that shared, live events of this kind will prove more effective at developing trust, and providing on-the-spot support at a moment when users are receptive to it. One said:

‘Institutions that took part in the previous pilot of this tool identified the value of providing face to face support possibly as a lunch time ‘Digital Capabilities’ drop in to help staff complete the tool, explain those questions that staff didn’t understand and to discuss digital capabilities.’

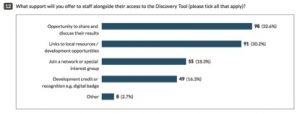

- Planned support for the Discovery Tool

Respondents most commonly selected 3 different kinds of support (mode 3, mean 2.8), so there is a clear understanding that users will need contextualised support of various kinds. All but 3 were planning to offer users the ‘opportunity to share and discuss their results’. Almost as many proposed to offer ‘links to local resources/development opportunities’, in addition to the resources provided as an outcome of the discovery process. Around half expected to offer support through a staff network or community of practice, and slightly fewer expected to offer accreditation or badging.

Less than 3% chose to tell us about ‘other’ forms of support, suggesting that the support activities we identified in the last pilot still cover most of the sensible options. The main form of support noted in the ‘other’ responses (not already captured in the closed options) was the offer of tailored training opportunities mapped to the discovery tool content.

What next?

Information from this report is being used to inform the guidance for pilots of the discovery tool, and to identify issues for further exploration. A follow-up post will have more detail about both the guidance and how we will be evaluating the pilot.

An important issue for institutional leads to consider is how much they can expect – and what they would do with – fine-grained data about staff capabilities. Data such as staff take-up of training opportunities, the quality of VLE materials, or student assessments of their digital experience may be more reliable than self-assessments by staff using the discovery tool. This information could be obtained using the digital experience tracker, also available from Jisc.

We know from the earlier evaluation that the discovery tool can help staff to become more aware of what confident digital practice looks like, and more self-directed in their professional learning. And organisations will be able to collect aggregate data about the number of staff who complete the discovery process, and other information to be determined during the pilot phase.

We will be exploring what data is really useful for planning interventions around staff digital capability, how this can be generated from the discovery tool, and how it can be reported without compromising staff willingness to engage.

For more information about the discovery tool please contact digitalcapability@jisc.ac.uk.